I spoke with a managing partner at a private equity firm with $3 billion in CRE assets. His firm had spent $250,000 building a custom AI tool to automate investment memo creation. The technology worked flawlessly. Six months after launch, nobody was using it.

“We don’t understand what went wrong,” he told me. “The AI is great. We trained it on our own memos. It produces quality output. But our team still writes memos the old way.”

I asked him one question: “Did you involve your investment team in the design process, or did you build it and then tell them to use it?”

Long silence.

“We wanted to surprise them with something amazing.”

There’s your problem.

The Pattern I Keep Seeing

Over the last two years, I’ve been involved in dozens of AI implementations across commercial real estate. Some have been transformative. Others have fallen short of expectations. And after seeing this play out again and again, I can tell you exactly what separates success from failure.

It’s not the model you choose. It’s not your budget. It’s not whether you build custom tools or use off-the-shelf solutions. It’s not even the quality of your data.

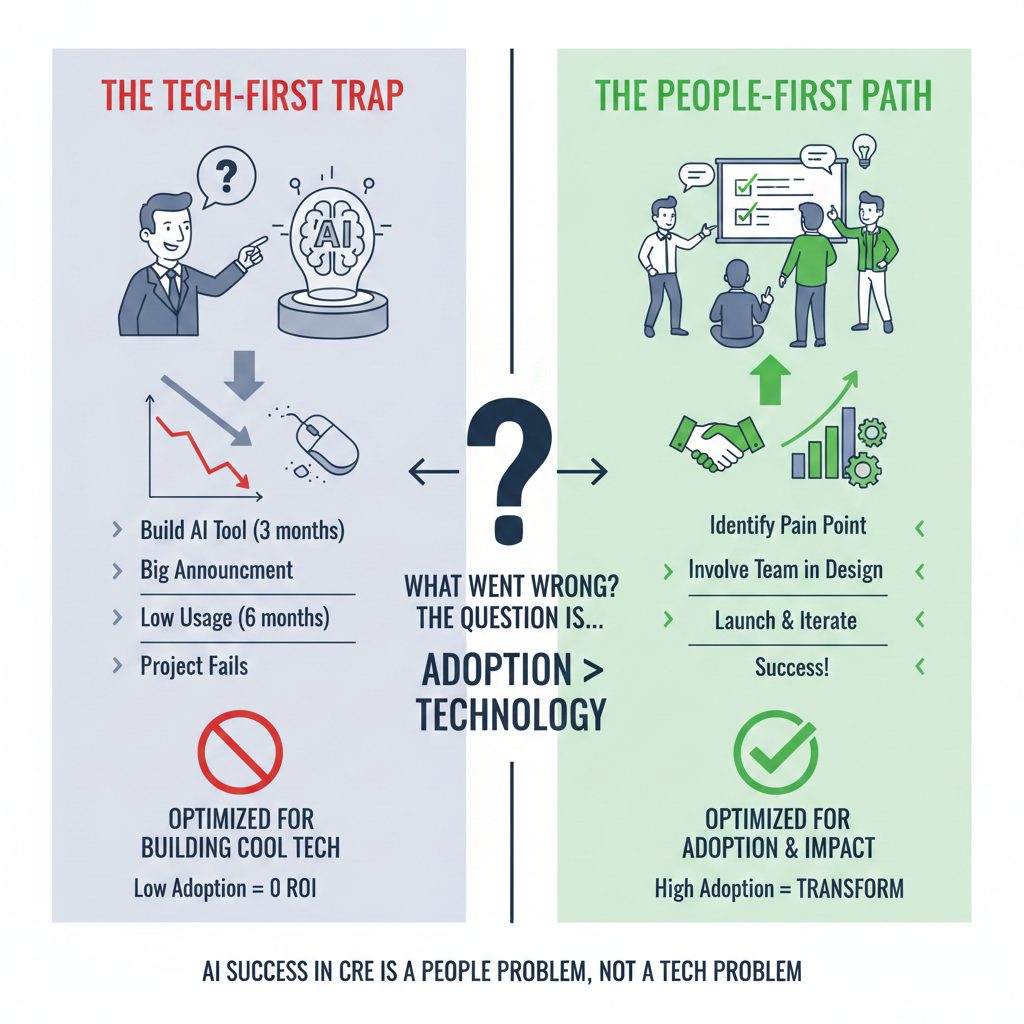

The number one predictor of AI success in CRE is whether you solved for adoption before you solved for technology.

The Technology Trap

Here’s what usually happens: A forward-thinking executive reads about AI’s potential. They get excited. They allocate the budget. They hire consultants or assign the project to IT. For three months, smart people build something technically impressive.

Then they unveil it to the team with a big presentation: “Look at this amazing AI tool we built! It’s going to save you hours every week!”

The team nods politely. They attend the training session. They try it once or twice. Then they quietly go back to doing things the way they’ve always done them.

Within six months, the expensive AI tool is a ghost town. The executive who championed it is frustrated. The team feels guilty but not guilty enough to change their workflow. And everyone concludes that “AI isn’t ready for real estate yet.”

But that’s not what happened. What happened is that you optimized for building cool technology instead of optimizing for getting people to use it.

What Successful Implementations Do Differently

Every successful AI implementation I’ve seen started with the same approach: they identified a pain point the team was already complaining about, then they involved the team in building the solution.

Not “we’ll build something and then train you on it.” But “help us design what would actually be useful to you.”

Let me give you an example. A multifamily operator wanted to use AI for lease analysis. Instead of building something in a vacuum, they started by sitting down with their three most experienced asset managers and asking: “What takes you the longest when reviewing leases? What do you always have to look up? What mistakes have you seen junior analysts make?”

The answers were specific: “It takes forever to calculate effective rents when there’s free rent. I always have to pull up the renewal option clauses. Junior people miss escalation clauses buried in addendums.”

So that’s what they built. Not a general “lease analysis tool” but a specific workflow that calculated effective rents, highlighted renewal options, and flagged buried escalation clauses.
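For readers who haven’t done this calculation, the effective-rent piece is simple arithmetic once free rent is involved. The Python sketch below is a simplified illustration, not the operator’s actual tool: `net_effective_rent` is a hypothetical name, and the sketch assumes a flat monthly rent, spreads total paid rent evenly over the term, and ignores escalations, tenant improvements, commissions, and discounting.

```python
def net_effective_rent(monthly_rent: float, term_months: int, free_months: int) -> float:
    """Average monthly rent over the lease term after free-rent concessions.

    Simplified assumption: flat rent, no escalations, TI, commissions,
    or time-value-of-money discounting.
    """
    if term_months <= 0:
        raise ValueError("term_months must be positive")
    if not 0 <= free_months <= term_months:
        raise ValueError("free_months must be between 0 and term_months")

    paid_months = term_months - free_months   # months the tenant actually pays
    total_rent = monthly_rent * paid_months   # total cash rent over the term
    return total_rent / term_months           # spread evenly across every month


# $2,000/month on a 12-month lease with 1 month free
print(round(net_effective_rent(2000, 12, 1), 2))  # 1833.33
```

With one month free, the tenant pays for 11 of 12 months, so the effective rent is 22,000 / 12, or about $1,833 a month rather than the $2,000 face rent.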

When they rolled it out, adoption was instant. Why? Because the tool solved real problems the team had been complaining about. It wasn’t imposed from above. It was built from the ground up based on their input.

The Change Management Nobody Talks About

AI implementation is change management more than it is technology implementation. You’re asking people to modify workflows they’ve used for years. You’re introducing uncertainty (What if the AI makes a mistake? What if I look dumb using it?). You’re disrupting comfortable routines.

If you don’t plan for that resistance, you will fail.

Here’s what works:

- Celebrate early wins publicly. When someone uses AI to save time or catch something they would have missed, share that story. “Sarah used the AI lease reviewer and found a renewal option that would have cost us $200K if we’d missed it.” Make the wins visible.

- Provide relentless support. In the first month, someone needs to be available to help every single time a team member tries to use the AI and gets stuck. Not “submit a ticket and we’ll get back to you.” But “Slack me right now and I’ll help you immediately.” Make using the AI the path of least resistance, not abandoning it.

- Normalize failure. AI makes mistakes. Your team will make mistakes using AI. If the culture punishes those mistakes, people will stop using AI to avoid risk. If the culture treats them as learning opportunities, people will keep trying.

The Role Design Problem

Another major failure point is not thinking through how AI changes people’s roles. If you automate part of someone’s job without giving them new responsibilities, they’ll resist the AI because it feels like a threat.

One firm I know implemented AI for financial modeling. It could build a DCF model in 5 minutes instead of 3 hours. Great, right? Except the junior analysts whose job was building DCF models suddenly felt obsolete. Morale tanked. Some quit.

The fix? Redefine their role. “You’re no longer spending 3 hours building models. Now you’re spending that time testing assumptions, running sensitivities, and thinking critically about what the model is telling us.” Make the AI a tool that lets them do higher-value work, not a replacement that makes them redundant.


The “Perfect” Problem

I see a lot of firms delay AI implementation because they’re waiting for the perfect solution. They want the AI to be 100% accurate, to handle every edge case, to integrate seamlessly with all their existing systems.

That’s a recipe for never shipping anything.

The firms that succeed start with something simple that works 80% of the time. They use it, learn from it, and improve it. They accept that version one will be imperfect. They build a culture of iteration.

Perfect is the enemy of done. And done-but-imperfect beats perfect-but-never-launched every single time.

The Metrics That Matter

Most AI implementations track the wrong metrics. They measure model accuracy, response time, API uptime. Those things matter, but they’re not what determines success.

The metrics that actually matter are:

- Adoption rate: What percentage of your team is using the AI at least weekly?

- Retention rate: Of the people who try it once, how many are still using it 30 days later?

- Impact on workflow: Are deal timelines actually getting shorter? Is analysis quality improving?

- User satisfaction: When you ask your team if the AI is helpful, what do they say?
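The first two metrics are easy to compute from a basic usage log. The Python sketch below assumes a hypothetical log of `(user, date)` events, one per AI session, and makes two definitional choices that are assumptions rather than anything prescribed above: a user counts as weekly active if they used the tool at least once in the seven days ending on the reporting date, and a user counts as retained if they used it again 30 or more days after their first use. The function names and sample data are illustrative only.

```python
from datetime import date, timedelta


def weekly_adoption_rate(events: list[tuple[str, date]], team: set[str], as_of: date) -> float:
    """Share of team members with at least one use in the 7 days ending on as_of."""
    if not team:
        return 0.0
    window_start = as_of - timedelta(days=6)  # 7-day window, inclusive of as_of
    active = {user for user, day in events if user in team and window_start <= day <= as_of}
    return len(active) / len(team)


def retention_30d(events: list[tuple[str, date]], as_of: date) -> float:
    """Of users whose first use was 30+ days before as_of, the share who
    used the tool again 30 or more days after that first use."""
    first_use: dict[str, date] = {}
    for user, day in events:
        if user not in first_use or day < first_use[user]:
            first_use[user] = day

    # Only count users who have had a full 30 days to come back.
    eligible = {u for u, first in first_use.items() if first + timedelta(days=30) <= as_of}
    if not eligible:
        return 0.0
    retained = {u for u, day in events
                if u in eligible and day >= first_use[u] + timedelta(days=30)}
    return len(retained) / len(eligible)


# Hypothetical usage log for a four-person team
team = {"ana", "ben", "cy", "dee"}
events = [
    ("ana", date(2024, 1, 2)), ("ana", date(2024, 2, 5)),
    ("ben", date(2024, 1, 3)),
    ("cy", date(2024, 2, 6)),
    ("dee", date(2024, 1, 5)), ("dee", date(2024, 2, 7)),
]
as_of = date(2024, 2, 7)

print(weekly_adoption_rate(events, team, as_of))  # 0.75
print(round(retention_30d(events, as_of), 2))     # 0.67
```

Excluding users who first tried the tool less than 30 days ago keeps brand-new adopters from dragging the retention rate down before they have had a chance to come back.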

If your adoption rate is low, nothing else matters. The most technically impressive AI in the world is worthless if nobody uses it.

The Fix Is Simpler Than You Think

If you’re in the middle of a struggling AI implementation, here’s what to do:

- Stop. Pause the rollout. Acknowledge that something isn’t working.

- Ask why. Talk to your team. Not “do you like the AI” but “what would make you actually use this every day?” Listen to the real reasons they’re not using it.

- Fix the biggest obstacle. Is it too complicated? Simplify it. Is it solving the wrong problem? Pivot to a more painful problem. Is there no support? Add support.

- Relaunch with a small group. Don’t try to fix everything at once. Get 3-5 people using it successfully, then expand.

- Iterate publicly. Let your team see that you’re listening and improving based on their feedback. When someone suggests a feature and you add it, tell everyone.

The Bottom Line

Technology is the easy part. Culture is the hard part. Process change is the hard part. Getting people to trust something new is the hard part.

If you’re planning an AI implementation, spend more time thinking about adoption than you spend thinking about technology. Involve your team from day one. Start with a painful problem. Launch something imperfect. Support people relentlessly. Celebrate wins.

Do that, and the technology will work. Ignore it, and even the best AI in the world will gather dust.

I’ve seen this pattern enough times now to say it with confidence: AI doesn’t fail in CRE because the technology isn’t ready. AI fails in CRE because we treat it like a technology problem instead of a people problem.

Fix the people part, and the technology part takes care of itself.

Frequently Asked Questions About Why AI Fails In CRE And How To Fix It

The number one reason is that firms optimize for building cool technology instead of optimizing for getting people to use it. A typical pattern is that an executive gets excited, allocates budget, has IT or consultants build something impressive, then unveils it to the team expecting enthusiasm. The team tries it once or twice and quietly goes back to their old workflow. The problem is not that AI is not ready for real estate. The problem is that adoption was never planned for from the start.

The biggest predictor of success is whether you solved for adoption before you solved for technology. It is not the model you choose, your budget, whether you build custom or use off-the-shelf, or even the quality of your data. Every successful AI implementation starts by identifying a pain point the team is already complaining about and then involving the team in building the solution. Not building something and then training them on it, but asking them to help design what would actually be useful.

AI implementation is change management more than it is technology implementation. You are asking people to modify workflows they have used for years, introducing uncertainty about making mistakes and looking foolish, and disrupting comfortable routines. What works is celebrating early wins publicly so people see real examples, providing relentless support so someone is available immediately when a team member gets stuck, and normalizing failure so people keep experimenting instead of avoiding risk. If the culture punishes AI mistakes, people will stop using it entirely.

If you automate part of someone’s job without giving them new responsibilities, they will resist the AI because it feels like a threat. The fix is to redefine their role. Instead of spending three hours building a model, they now spend that time testing assumptions, running sensitivities, and thinking critically about what the model is telling them. Make AI a tool that lets people do higher-value work, not a replacement that makes them feel redundant.

You should not wait for a perfect solution. Waiting for the AI to be completely accurate, handle every edge case, and integrate seamlessly with all existing systems is a recipe for never shipping anything. The firms that succeed start with something simple that works 80 percent of the time, use it, learn from it, and improve it. They accept that version one will be imperfect and build a culture of iteration. Done but imperfect beats perfect but never launched every single time.

Most firms track the wrong things, such as model accuracy, response time, and API uptime. The metrics that actually determine success are adoption rate, meaning what percentage of your team uses AI at least weekly; retention rate, meaning how many people who try it once are still using it 30 days later; impact on workflow, meaning whether deal timelines are actually getting shorter and analysis quality is improving; and user satisfaction, meaning what your team says when you ask if the AI is helpful. If adoption is low, nothing else matters.

If an implementation is struggling, pause the rollout and acknowledge that something is not working. Talk to your team and ask what would make them actually use it every day. Fix the single biggest obstacle, whether that is complexity, solving the wrong problem, or lack of support. Relaunch with a small group of three to five people and get them using it successfully before expanding. Iterate publicly so your team sees you are listening and improving based on their feedback.

CRE Agents is a digital coworker platform built specifically for commercial real estate teams. It is designed around the workflows your team already uses and the pain points they already have, so adoption happens naturally instead of being forced from above. The platform helps firms move from failed AI experiments to tools their people actually want to use every day.