Allow me to start with a tension I hear almost every week.

On one hand, everyone in commercial real estate is talking about AI. On the other, most firms I talk to cannot point to a single line item in the P&L that AI has actually changed.

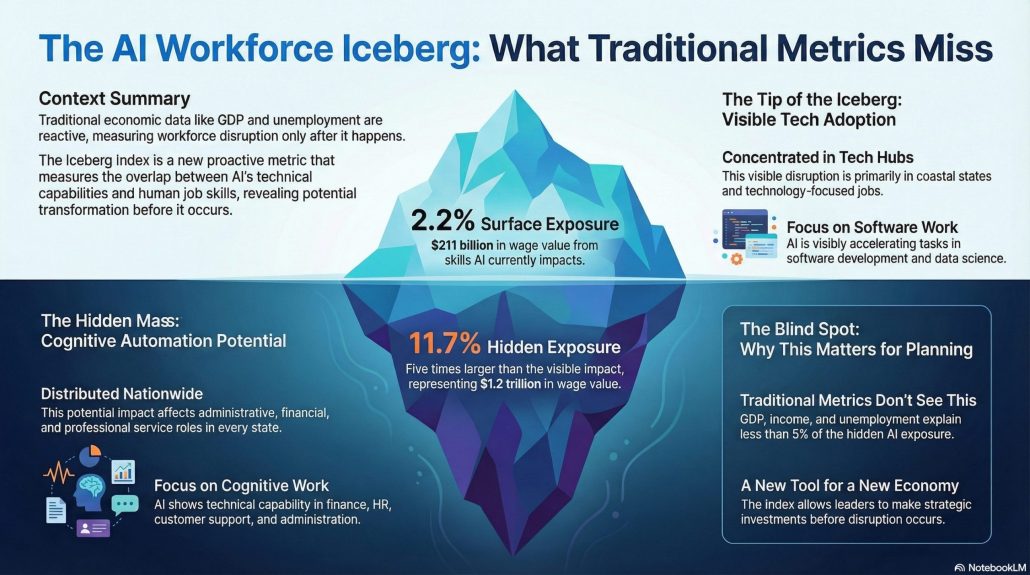

MIT has been studying that gap. Project Iceberg at MIT built a new metric, the Iceberg Index, to quantify where AI could matter in the economy, regardless of whether anyone has adopted it yet. When you combine that work with newer MIT research on generative AI pilots, a very clear story emerges:

- Visible AI adoption today sits on the tip of the iceberg.

- The real economic opportunity sits below the waterline, in the boring cognitive work that never makes the press release.

- Firms that treat AI as tools and workflows, rather than toys, are the ones that will actually capture value.

That story maps almost one‑to‑one onto what we are building with CRE Agents and how AI-native CRE teams will operate.

Let me unpack how.

What MIT’s Iceberg Index Actually Measures

The Iceberg Index is a skills-centered metric. It asks a simple question:

For each occupation, what fraction of the skills (by wage value) can AI systems technically perform today?

Important details:

- It is about technical exposure, not job loss.

- It measures skills and tasks, not job titles.

- It is independent of whether any adoption has happened.

MIT’s team does this by:

- Breaking jobs into underlying skills and tasks.

- Mapping current AI capabilities to those skills.

- Valuing that overlap using wage data.

The result is a kind of AI exposure heatmap across the economy.
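To make the three steps above concrete, here is a minimal sketch of a wage-weighted exposure calculation. It is not MIT's actual model: the `Skill` and `Occupation` classes, the function names, and every number below are hypothetical and only illustrate the idea of valuing the AI-capable share of skills by wages.

```python
from dataclasses import dataclass


@dataclass
class Skill:
    name: str
    wage_share: float  # fraction of the occupation's wage value tied to this skill
    ai_capable: bool   # assumption: True if current AI can technically perform it


@dataclass
class Occupation:
    title: str
    total_wages: float  # annual wage bill for the occupation, in dollars
    skills: list[Skill]


def exposed_wage_value(occ: Occupation) -> float:
    """Dollar value of wages tied to skills flagged as AI-capable."""
    exposed_share = sum(s.wage_share for s in occ.skills if s.ai_capable)
    return occ.total_wages * exposed_share


def iceberg_style_index(occupations: list[Occupation]) -> float:
    """Exposed wage value as a fraction of total wage value."""
    total = sum(o.total_wages for o in occupations)
    exposed = sum(exposed_wage_value(o) for o in occupations)
    return exposed / total if total else 0.0


# Hypothetical figures, for illustration only.
analyst = Occupation("Acquisitions analyst", 1_000_000, [
    Skill("Parse OMs and rent rolls", 0.30, True),
    Skill("Build and reconcile models", 0.30, True),
    Skill("Site tours and broker relationships", 0.40, False),
])
manager = Occupation("Property manager", 3_000_000, [
    Skill("Tenant correspondence", 0.20, True),
    Skill("On-site operations", 0.80, False),
])

index = iceberg_style_index([analyst, manager])
print(f"Exposed wage share: {index:.1%}")
```

Note that the measure says nothing about adoption. It only weights what is technically possible by what the work costs.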

A few key numbers from their work:

- AI that is visible in tech and computing accounts for about 2.2% of wage value, roughly 211 billion dollars in the United States.

- When you include cognitive work in admin, finance, and professional services, the technically exposed wage share jumps to about 11.7%, roughly 1.2 trillion dollars.

- Traditional macro indicators like GDP and unemployment explain less than 5% of the variation in this exposure measure.

In other words: most of the AI opportunity sits in white-collar workflows that look a lot like what CRE teams do all day. And you will not see that by staring at GDP releases.

The Other MIT Story: 95% Of GenAI Pilots Do Nothing

Separate but related MIT-affiliated work (through Project NANDA’s “GenAI Divide” report) looks at what happens when companies actually try to deploy generative AI.

Their headline: roughly 95% of GenAI pilots deliver no measurable P&L impact. Only about 5% produce material revenue or margin improvement.

If you work in CRE, that statistic feels familiar. I have seen the same pattern:

- Someone spins up a chatbot on property data.

- Another team builds a quick Excel formula helper.

- A broker plays with a marketing copy bot.

Six months later, nothing in the budget changed. The experiments were interesting. But they did not move rent, occupancy, fees, or overhead.

Taken together, the Iceberg Index and the GenAI Divide results say the same thing in different languages:

- AI’s technical reach is large and growing.

- Our ability to turn that reach into real business outcomes is still small.

The gap is not capability. It is coordination.

Why This Matters Specifically For CRE

Now think about a typical CRE shop, from acquisitions through asset management.

Daily work is dominated by:

- Parsing PDFs and broker OMs.

- Normalizing rent rolls and T‑12s.

- Reconciling model versions and assumptions.

- Writing IC memos and lender packages.

- Doing comp sets, location research, and rent surveys.

- Managing investor reporting and quarterly letters.

- Answering the same tenant and lender questions over and over.

Almost none of this shows up in a job title. All of it shows up in an Iceberg Index style of analysis as cognitive, text‑heavy, rule‑bounded work that modern AI systems handle well.

If you asked MIT’s model where AI could matter inside real estate, it would light up exactly these workflows.

The question is not whether AI can help. It is whether your organization is structured to surface and rewire those submerged tasks in a way that you can measure.

That is where most CRE teams fall short.

CRE’s Iceberg Problem

The metaphor is almost too perfect.

- Tip of the iceberg:

- A flashy site chatbot that answers basic FAQs.

- An “AI underwriting assistant” that suggests cap rates without touching your actual model.

- A pretty dashboard that summarizes news headlines for your markets.

These are easy to show on a slide. They look modern. They rarely change underwriting, decision speed, or headcount.

- Below the waterline:

- The messy handoffs between analysts, associates, asset managers, and accounting.

- The 40 minutes wasted every time someone re‑builds a sensitivity table.

- The back‑and‑forth emails to obtain “one more data point” for an IC memo.

- The home‑grown checklists and macros that only one person truly understands.

The Iceberg Index is fundamentally about this submerged layer. It says: here is where AI can already mimic or assist the skills you are spending real wages on.

CRE, as an industry, has an iceberg problem because:

- Our visible processes, like final models and polished memos, look fine.

- Our invisible processes, the ones that eat time and attention, are where the economic drag sits.

If you run AI pilots on the visible layer and ignore the submerged layer, you will land in that 95% of pilots with no P&L impact.

How To Read The Iceberg Index As A CRE Operator

MIT built the Iceberg Index for policymakers and economists. As a CRE operator, you can treat it as a strategy lens.

Here is how I would apply it.

- Assume your work is more exposed than you think.

If you are in acquisitions, asset management, capital markets, research, or portfolio management, your job is a bundle of cognitive tasks that today’s models are reasonably good at: reading, summarizing, classifying, calculating, drafting, reconciling.

- Stop asking “Will AI replace this role?”

That is the wrong question. The right question is:

- Which 30–50% of tasks inside this role are technically automatable right now?

- How do we re‑architect the process so those tasks stop consuming analyst time?

- Prioritize by wage value and cycle time, not cool factor.

MIT values exposure using wages. In CRE, I would approximate that by asking:

- Where does senior talent spend time doing junior work?

- Where do bottlenecks slow deals, approvals, or reporting?

Those are your highest value use cases.

- Treat workflows as products, not side projects.

A one‑off script that parses a single OM is interesting.

A reusable workflow that can parse every OM you see this year, write a deal factsheet, update the pipeline, and drop files into the right folders is a product. That is where value accrues.

- Measure in terms your IC and LPs already understand.

- Hours per deal.

- Deals per analyst.

- Time from OM received to first pass underwriting.

- Time from quarter end to investor reporting sent.

Tie AI work to those metrics, or you will never see the benefit in the P&L.
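As a rough illustration of prioritizing by wage value and bottlenecks rather than cool factor, the sketch below ranks candidate tasks. The `Task` fields, the ranking rule, and all figures are assumptions made for this example, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hours_per_week: float  # time the team spends on the task
    hourly_cost: float     # loaded hourly cost of whoever usually does it
    blocks_deal: bool      # True if it sits on the critical path of a deal or report


def weekly_wage_value(task: Task) -> float:
    """Wages spent on the task each week."""
    return task.hours_per_week * task.hourly_cost


def rank_tasks(tasks: list[Task]) -> list[Task]:
    """Bottleneck tasks first, then highest weekly wage value."""
    return sorted(
        tasks,
        key=lambda t: (t.blocks_deal, weekly_wage_value(t)),
        reverse=True,
    )


# Hypothetical inputs, for illustration only.
tasks = [
    Task("Marketing copy drafts", 2, 60, False),
    Task("Rent roll normalization", 10, 75, True),
    Task("VP rebuilding sensitivity tables", 4, 200, True),
]

for t in rank_tasks(tasks):
    print(f"{t.name}: ${weekly_wage_value(t):,.0f}/week, bottleneck={t.blocks_deal}")
```

In this made-up example, the senior person doing junior work ranks first even though the task takes fewer hours, because the wage value is higher.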

This is exactly where a platform like CRE Agents lives: taking the submerged, repeatable work that MIT’s methods flag as AI‑exposed and turning it into reliable workflows that run the same way every time.

How We Are Applying This At CRE Agents

At CRE Agents, we did not start with “What can AI do?” We started with “Where do CRE professionals burn time on tasks that look like the bottom of the Iceberg Index?”

That led us to build workflows such as:

- Deep Location Analysis

From a single address, the system builds a research URL, hits external data sources, reads satellite imagery context, pulls 1‑mile demographics, and returns a structured narrative plus a link and suggested next actions.

What used to be 30–60 minutes of manual research is now a few minutes of automated, repeatable work.

- Expense Ratio Diagnostics

Feed in a T‑12 and unit count, and the workflow classifies operating expenses, produces per‑unit metrics, flags anomalies, and summarizes findings for underwriting.

Analysts no longer start from a blank Excel sheet; they start from a structured diagnostic.

- Lease Abstraction And IC Memo Support

Upload a lease or OM, and agents parse, extract key facts, write summaries, and populate consistent templates for underwriting or committee review.

These are not “chatbots.” They are codified workflows that align with how CRE teams already work.
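To give a feel for what a codified workflow looks like, here is a simplified sketch of the per-unit and anomaly-flagging step of an expense diagnostic. It is not the CRE Agents implementation: the function name, the 25% tolerance, the benchmark values, and the T‑12 figures are all hypothetical.

```python
def expense_diagnostics(t12, units, benchmarks, tolerance=0.25):
    """Compute per-unit annual expenses and flag categories far from a benchmark.

    t12:        dict of expense category -> trailing-12-month amount in dollars
    units:      number of units at the property
    benchmarks: dict of category -> expected per-unit amount (assumed inputs)
    tolerance:  allowed relative deviation from the benchmark before flagging
    """
    report = {}
    for category, annual_amount in t12.items():
        per_unit = annual_amount / units
        benchmark = benchmarks.get(category)
        flagged = (
            benchmark is not None
            and abs(per_unit - benchmark) > tolerance * benchmark
        )
        report[category] = {
            "per_unit": round(per_unit, 2),
            "benchmark": benchmark,
            "flagged": flagged,
        }
    return report


# Hypothetical property and benchmarks, for illustration only.
t12 = {"Repairs and maintenance": 95_000, "Insurance": 42_000, "Utilities": 60_000}
benchmarks = {"Repairs and maintenance": 600, "Insurance": 400, "Utilities": 650}

report = expense_diagnostics(t12, units=100, benchmarks=benchmarks)
for category, row in report.items():
    print(category, row)
```

The point of the design is that the analyst reviews a structured result with flags already attached, instead of building the per-unit table by hand.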

From an Iceberg Index perspective, each workflow is a way of saying:

- Here is a cluster of skills and tasks that are technically AI‑exposed.

- Here is a design that turns that exposure into a concrete, repeatable process.

- Here is how that process shows up in hours saved, errors reduced, or deals processed.

This is exactly the bridge that is missing in that 95% of failed GenAI pilots.

A Practical Playbook For AI-Native CRE Teams

If you want to act on MIT’s findings instead of just citing them in a slide deck, here is a simple starting point for your team.

- Inventory tasks, not roles.

Take one representative deal or quarter and write down every discrete task from OM arrival to final IC memo or investor letter. Note who does what, in what system, and in what order.

- Mark what is AI-exposed today.

For each task, ask:

- Does this involve reading and understanding text or numbers?

- Does it follow a pattern you could explain to a new analyst?

- Would you be comfortable if a junior did a first pass that you review?

If yes to those questions, chances are current models can handle a large share of the work.

- Pick one workflow worth turning into a product.

Criteria I like:

- Happens at least weekly.

- Involves multiple handoffs.

- Touches documents and data you already store digitally.

- Has a clear definition of “done.”

- Design the “happy path” end‑to‑end.

- Input: what does the user give the system?

- Process: which steps should AI do, and which should a human review or approve?

- Output: what files, fields, and decisions need to exist at the end?

If you cannot draw this on a single page, simplify until you can.

- Instrument it.

At minimum, track:

- Time from input to output.

- Number of human touches.

- Error or rework rate.

Run your old process and your AI‑assisted workflow in parallel for a handful of cases. Then compare.

- Only then, scale.

Once you see real improvement on one workflow, you have more than a “pilot.” You have a template for how to build, test, and scale AI workflows across the firm.
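The “Instrument it” step in the playbook above can be sketched as a simple side-by-side comparison of the three tracked measures. The `Run` record, the helper names, and the sample runs are hypothetical. In practice you would log these from real cases run through both processes.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Run:
    minutes: float       # time from input to output
    human_touches: int   # number of times a person had to step in
    needed_rework: bool  # True if the output had to be redone or corrected


def summarize(runs):
    """Average the three tracked measures over a set of runs."""
    return {
        "avg_minutes": mean(r.minutes for r in runs),
        "avg_touches": mean(r.human_touches for r in runs),
        "rework_rate": sum(r.needed_rework for r in runs) / len(runs),
    }


def compare(baseline, assisted):
    """Change in each measure (assisted minus baseline); negative is better."""
    b, a = summarize(baseline), summarize(assisted)
    return {key: a[key] - b[key] for key in b}


# Hypothetical parallel runs on four cases, for illustration only.
baseline = [Run(60, 4, True), Run(50, 5, False), Run(70, 3, True), Run(60, 4, False)]
assisted = [Run(15, 2, False), Run(25, 2, True), Run(20, 2, False), Run(20, 2, False)]

delta = compare(baseline, assisted)
print(delta)
```

A handful of cases will not give statistical certainty, but it is enough to tell whether the workflow is worth scaling.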

This is how you move from “we tested ChatGPT on a lease” to “we closed the gap between the tip and the bulk of our AI iceberg.”

Why CRE Professionals Should Care About The Iceberg Index

The MIT work is not about real estate specifically. But the pattern it reveals might fit CRE better than almost any other sector.

We are an information-heavy, relationship‑driven business where:

- Small timing advantages compound into real money.

- Process quality affects lender, partner, and LP confidence.

- Skilled people spend shocking amounts of time on low‑leverage tasks.

The Iceberg Index says: AI is already capable of assisting with a large share of those tasks. The GenAI Divide report says: almost nobody is monetizing that capability yet.

That is the opening.

If you treat AI experiments as short‑lived demos, your firm will sit in the 95%. You will have stories about pilots but none about returns.

If you treat AI as an opportunity to codify your workflows, encode your culture, and build a real automation system of record, you move into the 5% and beyond.

That is the bet we are making with CRE Agents.

If you are a CRE professional who wants to play the long game on AI, the most important thing you can do this year is start cataloging your own iceberg: the real work your team does that never makes it into the glossy materials. That is where the machines can already help, and where your advantage will come from if you choose to build.

Sources

- MIT Project Iceberg, “Project Iceberg – Coordinating the Human‑AI Future” and Iceberg Index overview: https://iceberg.mit.edu/

- MIT‑affiliated Project NANDA, “The GenAI Divide: State of AI in Business 2025” (pilot ROI statistics and P&L impact findings).

Frequently Asked Questions About The MIT Iceberg Index And AI In CRE

Tie every AI workflow to a metric your IC or LPs already track: hours per deal, deals screened per analyst, time from OM received to first-pass underwriting, or time from quarter end to investor reporting sent. Run your old process and AI-assisted workflow in parallel on a handful of real cases and compare directly. Avoid presenting AI value in terms of technology features. Present it as a before-and-after on cycle time, capacity, or error rate. That is the language that moves budget conversations.

Most pilots fail because they target visible, easy-to-demo use cases like chatbots or dashboard summaries instead of the submerged repetitive work that actually consumes analyst time and slows deals. The 5 percent that succeed treat AI workflows as products with defined inputs, clear human review steps, and measurable outputs rather than one-off experiments. CRE firms specifically fail when they pilot AI on polished final deliverables like models and memos instead of on the messy handoffs, data normalization, and document parsing underneath those deliverables.

Most teams can complete a useful task inventory in one to two weeks by walking through one representative deal or reporting cycle end to end. You do not need to catalog every edge case. Focus on tasks that happen at least weekly, involve reading or processing text and numbers, follow a pattern you could explain to a new analyst, and touch documents you already store digitally. That short list is usually enough to identify your first two or three high-value workflows worth turning into repeatable AI-assisted processes.